Events API Introduction

Introduction

This page defines the Event API, a general-purpose Scalepoint B2B two-way messaging bridge. Data synchronization between collaborators' systems is often necessary to automate business processes across organizations. Service requests, orders, invoices and various other document notifications are good examples of events. Scalepoint, our customers and our service partners will all benefit from a unified, secure and reliable data channel for these events. This document focuses on external communication as perceived by a single customer or partner, and omits the definition of Scalepoint's internal message routing and segregation logic. It also does not address on-demand data fetching: although messaging could be misused for that purpose with request-response message pairs, it must not be.

Goals

The Event API is designed to meet the following goals:

- Unified implementation

- Secure communications

- Reliable message delivery

- High availability of services

- High message throughput

- Fast time-to-market for event based integrations

- Low cost of development and maintenance

Problem description

Analysis and design for every new event integration usually take a long time.

Settling the important business-process-level details is inherently hard: "who" sends the data, "what" data is sent, "when" it is sent and "why". This proposal instead addresses another significant part: the "how".

In a B2B setting, events are often delivered over a custom web service, as batch files over FTP/SFTP, etc. These integrations are often poorly protected, if at all, and differ for every event type. This not only increases time-to-market for new integrations, because of the grunt work involved in each project, but also complicates maintenance of existing integrations as their number grows.

This is why introducing a generic two-way messaging layer is important: it lets us tackle concerns such as security, availability and API documentation just once. The concept isn't new, and there are many off-the-shelf brokered and broker-less messaging solutions that do just that. However, none of them are truly designed for cross-company scenarios, especially where business continuity is important, and our integrations are expected to work for years once enabled.

Traditional messaging (and any hand-coded push integration) also lacks out-of-the-box disaster recovery and debugging options. If the recipient acknowledges messages as received and then discards them due to a code defect, the only way to recover is re-transmission from the sender, which either has to be supported by the sender or requires simulating the past events manually.

Solution

Instead of pushing events between the parties in a fire-and-forget manner and discarding messages once they are delivered to the recipient, we publish them to log-structured storage. The recipient can later fetch them from that store as many times as necessary.

Recipients can seek through this persistent event stream at their own pace. Instead of acknowledging messages, they simply save their current position in the stream locally and continue from that point later. There is no need to deal with poison messages in the stream itself.
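To make the position-based model concrete, here is a minimal in-memory sketch, assuming a single stream whose entries are addressed by an integer position. `EventLog` and `Consumer` are hypothetical names used only for illustration; the real store and its interface are not described on this page. Reading never deletes anything, so a consumer can rewind with `seek()` and read the same events again.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class EventLog:
    """Hypothetical in-memory stand-in for the log-structured event store."""
    _entries: list[dict[str, Any]] = field(default_factory=list)

    def append(self, event: dict[str, Any]) -> int:
        # Events are only ever appended; a position never changes once assigned.
        self._entries.append(event)
        return len(self._entries) - 1

    def read(self, position: int, limit: int = 100) -> list[tuple[int, dict[str, Any]]]:
        # Reading removes nothing, so the same range can be read any number of times.
        end = min(position + limit, len(self._entries))
        return [(i, self._entries[i]) for i in range(position, end)]


class Consumer:
    """Keeps its own position in the stream instead of acknowledging messages."""

    def __init__(self, log: EventLog, position: int = 0) -> None:
        self.log = log
        self.position = position  # next position to read; persisted locally in practice
        self.seen: list[dict[str, Any]] = []

    def poll(self) -> None:
        for pos, event in self.log.read(self.position):
            self.seen.append(event)
            self.position = pos + 1  # advance the local position after each event

    def seek(self, position: int) -> None:
        # Rewinding is just moving the local position; the log is unaffected.
        self.position = position
```

After polling three events, calling `seek(1)` and polling again re-reads events 1 and 2 without any involvement from the sender.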

Recipients are encouraged to queue messages from the stream into their internal messaging infrastructure (ESB) and to implement transient error recovery and poison message handling there. Persistence at the boundary provides a big advantage: any party can recover from application errors in its message handling code on its own, simply by re-queuing the needed messages from the persistent event stream.
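The recovery scenario above can be sketched as follows, assuming the stream is a list indexed by position and the recipient's internal queue is a `deque`; both are hypothetical stand-ins for real infrastructure (the persistent event stream and an ESB queue). After a defect in the handler is fixed, the recipient simply forwards the affected range again.

```python
from collections import deque
from typing import Any, Optional

# Hypothetical stand-in for the persistent event stream: a list indexed by position.
stream: list[dict[str, Any]] = [
    {"id": "e1", "type": "invoice"},
    {"id": "e2", "type": "invoice"},
    {"id": "e3", "type": "order"},
]

# Hypothetical stand-in for the recipient's internal queue (an ESB queue in practice).
internal_queue: deque[dict[str, Any]] = deque()


def forward(from_position: int, to_position: Optional[int] = None) -> int:
    """Copy events [from_position, to_position) from the stream into the internal queue.

    Returns the next position to read. The stream itself is never modified,
    so any range can be forwarded again later.
    """
    end = len(stream) if to_position is None else to_position
    for event in stream[from_position:end]:
        internal_queue.append(event)
    return end
```

For example, if event `e2` (position 1) was mishandled, `forward(1, 2)` re-queues just that event once the fix is deployed.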

As a convenience built on top of the persistent event stream, we also offer configurable webhooks to simplify implementation for customers. These webhooks preserve the ability to rewind and time travel in the event stream (by recreating them) and support multiple partitioned subscribers.

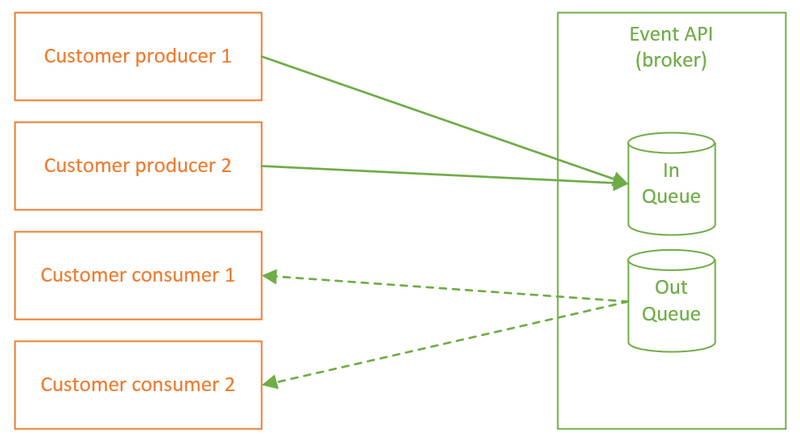

Topology

To enable two-way event exchange, we need two event streams per customer or partner: inbound and outbound. Scalepoint's line-of-business (LOB) applications are responsible for reliably delivering messages to the outbound stream and receiving them from the inbound stream; the details are out of scope of this proposal.

The customer's responsibility is then to receive events from the outbound stream and to deliver events to Scalepoint through the inbound stream.

API

The API will expose two endpoints:

- Send events

- Receive events (with filtering by event type)

and a configurable webhook push:

- /subscriptions REST resource to be introduced later

Unification

The same event API can be used to send and receive events for all lines of business, even for non case-related data. Internally, events can be filtered and routed based on the payload. Specific event types and business process definitions for each domain are out of scope of this proposal.

Security

All communication should happen strictly over HTTPS.

All Event API endpoints are to be protected with OAuth Bearer tokens, the same as the existing Unified Create Case. The API client should use the same authentication scheme (and can use the same credentials) as for Unified Create Case, but with a different scope value: "events" instead of "case_integration".
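A minimal sketch of obtaining and using a token, assuming the standard OAuth 2.0 client-credentials grant with HTTP Basic client authentication (RFC 6749). `TOKEN_URL` is a hypothetical placeholder, and whether Unified Create Case uses exactly this grant and client authentication method is an assumption; only the `events` scope value comes from this page. The request is built but not sent.

```python
import base64
import urllib.parse
import urllib.request

# Hypothetical placeholder: use the token endpoint you already use for Unified Create Case.
TOKEN_URL = "https://auth.example.com/connect/token"


def build_token_request(client_id: str, client_secret: str) -> urllib.request.Request:
    """Build an OAuth 2.0 client-credentials token request for the "events" scope."""
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": "events"}
    ).encode("ascii")
    # RFC 6749, section 2.3.1: form-encode the credentials, then use HTTP Basic auth.
    # Assumption: the real authorization server may expect another client auth method.
    user = urllib.parse.quote_plus(client_id)
    password = urllib.parse.quote_plus(client_secret)
    credentials = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        TOKEN_URL,
        data=body,
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )


def bearer_header(access_token: str) -> dict[str, str]:
    """Header to attach to every Event API call once a token has been obtained."""
    return {"Authorization": f"Bearer {access_token}"}
```

The `access_token` field of the token response would then be passed to `bearer_header()` for send and receive calls alike.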

Webhook push will present a JWT token signed with a pre-shared key (PSK) to the subscriber endpoint. The key is randomly generated during webhook registration and returned only once. The JWT token might include a hash of the message as a signing mechanism; in that case it will be generated per message and have a short TTL (5 minutes). Payload encryption (symmetric with the same PSK, or asymmetric with an uploaded public key) could also be introduced if TLS proves insufficient at some point.
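The subscriber-side check could look like the following sketch, assuming HS256 (HMAC-SHA256) as the PSK signing algorithm and a hypothetical `body_sha256` claim carrying the message hash; the actual algorithm and claim names are not specified on this page. It uses only the Python standard library for illustration; a maintained JWT library is preferable in production.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Optional

MAX_TTL_SECONDS = 5 * 60  # the short TTL mentioned above


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign(body: bytes, psk: bytes, now: Optional[int] = None) -> str:
    """Sender side: an HS256 JWT bound to one message body (claim names hypothetical)."""
    issued = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "iat": issued,
        "exp": issued + MAX_TTL_SECONDS,
        "body_sha256": hashlib.sha256(body).hexdigest(),
    }
    signing_input = _b64url(json.dumps(header).encode()) + "." + _b64url(json.dumps(claims).encode())
    signature = hmac.new(psk, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify(token: str, body: bytes, psk: bytes, now: Optional[int] = None) -> dict[str, Any]:
    """Subscriber side: raise ValueError unless the token is valid for this exact body."""
    try:
        header_b64, claims_b64, signature_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    # Check the signature before trusting anything inside the token.
    expected = hmac.new(psk, f"{header_b64}.{claims_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    claims = json.loads(_b64url_decode(claims_b64))
    current = int(time.time()) if now is None else now
    exp = claims.get("exp")
    if not isinstance(exp, int) or current >= exp:
        raise ValueError("token expired")
    if not hmac.compare_digest(claims.get("body_sha256", ""), hashlib.sha256(body).hexdigest()):
        raise ValueError("body does not match token")
    return claims
```

The subscriber should reject the request whenever `verify()` raises, and should hash the raw request body bytes before any JSON parsing.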

Reliable messaging

The API guarantees that once a message is successfully received, it is atomically published in the stream and can safely be marked as acknowledged by the sender, or removed from its internal outbound queue, if any.
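On the sender side, this guarantee allows a simple outbox pattern: peek at the oldest queued event, send it, and remove it only once the API has confirmed it. In this sketch `send` is a hypothetical callable wrapping the send request. If the response is lost after the API has already published the event, the event is sent again, so recipients may see duplicates.

```python
from collections import deque
from typing import Any, Callable

Event = dict[str, Any]


def drain_outbox(outbox: "deque[Event]", send: Callable[[Event], bool]) -> int:
    """Send queued events in order, removing each one only after send() confirms it.

    send() is a hypothetical callable wrapping the Event API send request; it returns
    True when the API reported success. If it returns False or raises, the event stays
    at the head of the outbox and is retried on the next run.
    """
    sent = 0
    while outbox:
        event = outbox[0]      # peek, do not remove yet
        if not send(event):
            break              # keep the event and retry later
        outbox.popleft()       # safe: the API has published it in the stream
        sent += 1
    return sent
```

Stopping at the first failure keeps the send order intact, at the cost of blocking later events behind a failing one.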

The recipient can read the same messages as many times as necessary, seeking back and forth.

Both the long-polling and webhook interfaces currently deliver messages in send-time order (the time when the Event API received the event from the upstream service), which can differ from event time. Consumers are expected to process incoming messages asynchronously by forwarding them to an internal message queue, and to implement idempotent handling and out-of-order retries.
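A sketch of idempotent, order-tolerant handling, assuming each event carries a unique `id`, an `entity_id` and a comparable `event_time` (all hypothetical field names). It applies a last-writer-wins rule by event time, which is only one possible strategy for out-of-order delivery and not a mechanism prescribed by the Event API.

```python
from typing import Any


class IdempotentHandler:
    """Consumer-side handler that tolerates duplicates and reordering.

    Field names "id", "entity_id" and "event_time" are hypothetical.
    """

    def __init__(self) -> None:
        self.processed_ids: set[str] = set()        # a durable store in practice
        self.state: dict[str, dict[str, Any]] = {}  # latest applied event per entity

    def handle(self, event: dict[str, Any]) -> bool:
        """Apply the event; return False if it was a duplicate or is stale."""
        if event["id"] in self.processed_ids:
            return False  # redelivery or replay: already handled
        self.processed_ids.add(event["id"])
        current = self.state.get(event["entity_id"])
        if current is not None and current["event_time"] >= event["event_time"]:
            return False  # arrived out of order: a newer event is already applied
        self.state[event["entity_id"]] = event
        return True
```

Because replays and redeliveries are ignored, the same range of the stream can be re-queued safely during recovery.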

Payload format

JSON

Protocols

Send: HTTPS PUT/POST with message in the body
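A sketch of building the send request with the standard library, assuming a hypothetical `SEND_URL` and a bearer token obtained as described under Security. POST is used here; the page allows PUT as well. The request is built but not sent.

```python
import json
import urllib.request
from typing import Any

# Hypothetical placeholder; the real endpoint path belongs to the API reference.
SEND_URL = "https://api.example.com/events"


def build_send_request(event: dict[str, Any], access_token: str) -> urllib.request.Request:
    """Build an HTTPS POST carrying one JSON event in the body."""
    return urllib.request.Request(
        SEND_URL,
        data=json.dumps(event).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(request, timeout=30)` raises `urllib.error.HTTPError` for non-2xx responses; only a successful response means the event is published and can be removed from the sender's outbound queue.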

Receive: GET over HTTPS with long-polling. Consumers have to process messages sequentially and save the last processed event ID after every message.
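The sequential receive loop could be sketched like this. `fetch` is a hypothetical wrapper around the long-polling GET request that returns events newer than a given event ID; the query parameters of the real endpoint are not described here. The checkpoint is written atomically after each message, so a restart resumes after the last processed event (a crash between processing and saving means that one event is processed again). `max_polls` only bounds the loop for the sketch; a real consumer would loop indefinitely.

```python
import json
import os
import tempfile
from typing import Any, Callable, Iterable, Optional

Event = dict[str, Any]


def load_checkpoint(path: str) -> Optional[str]:
    """Return the last processed event ID, or None on first start."""
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)["last_event_id"]
    except FileNotFoundError:
        return None


def save_checkpoint(path: str, event_id: str) -> None:
    """Write the checkpoint atomically so a crash never leaves a torn file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump({"last_event_id": event_id}, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp, path)


def consume(
    fetch: Callable[[Optional[str]], Iterable[Event]],
    process: Callable[[Event], None],
    checkpoint_path: str,
    max_polls: int,
) -> None:
    """Sequential long-polling loop with a checkpoint after every message.

    fetch(after_id) is hypothetical: it blocks until events newer than after_id
    exist (or the poll times out) and returns them in stream order. process()
    would typically just enqueue the event into an internal queue.
    """
    last_id = load_checkpoint(checkpoint_path)
    for _ in range(max_polls):
        for event in fetch(last_id):
            process(event)
            last_id = event["id"]
            save_checkpoint(checkpoint_path, last_id)
```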

Webhook subscription: HTTP POST to configured endpoint with message in the body.

Maintenance

Historical events, i.e. those older than a year or another agreed period, can be cleaned up regularly.

Monitoring and alerting

For webhooks, since the subscriber might be down or unreachable for reasons outside Scalepoint's control, a support contact with an e-mail address (and possibly a phone number) must be defined when registering the subscription.

Benefits

- Unified implementation

- It is not required to deploy externally facing endpoints on the customer's side

- The same authentication/authorization scheme and implementation apply to both send and receive

- Easy debugging with event replay. The same events can be read over and over if necessary during debugging

- Simplified coordination for new event exchanges. One party can start sending events before the other is ready to receive them (can catch up later)

Client Implementation

Besides documentation for the API, Scalepoint can provide libraries and code samples for efficient back-pressure and maximum throughput over the API, client-side authentication handling, etc.
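As an illustration of the back-pressure idea (not a Scalepoint library), here is a sketch using a bounded `queue.Queue`: the reader blocks on `put()` whenever the buffer is full, so it never fetches faster than the workers can handle. Parallel workers do not preserve order, so this only fits downstream handling that is idempotent and tolerates reordering, as described under Reliable messaging. Error handling is omitted: a handler that raises stops its worker.

```python
import queue
import threading
from typing import Any, Callable, Iterable

Event = dict[str, Any]
_STOP = object()  # sentinel telling a worker to exit


def run_pipeline(
    events: Iterable[Event],
    handle: Callable[[Event], None],
    capacity: int = 100,
    workers: int = 4,
) -> None:
    """Bounded-queue back-pressure: the reader blocks while all workers are busy."""
    buffer = queue.Queue(maxsize=capacity)

    def worker() -> None:
        while True:
            item = buffer.get()
            try:
                if item is _STOP:
                    return
                handle(item)
            finally:
                buffer.task_done()

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for event in events:       # in practice: events fetched from the Event API
        buffer.put(event)      # blocks when the buffer is full (back-pressure)
    for _ in threads:
        buffer.put(_STOP)      # one sentinel per worker
    for t in threads:
        t.join()
```

The `capacity` bounds memory use, while `workers` controls throughput; both are tuning knobs rather than values taken from this page.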

Ready to integrate with the Event API?

The next chapter describes how to integrate with the Event API.